Terminated by NitwitNet

Whether AI is a gift or a curse, I don't know. But I do know that SkyNet is much less of a threat to us than NitwitNet.

I don’t talk much about AI because “tech” has left me behind. I was in my teenage years when home computers and the Internet started to take hold. I got tired of keeping up somewhere at the beginning of the smartphone craze and didn’t get my first smartphone until about five years ago. I could never quite figure out the appeal of a computer in your pocket that you put your entire life on, sometimes literally. I honestly still can’t. Yes, smartphones can be really, really handy, and I love being able to look a location up while I’m going there. But Google Pay and apps for fast food restaurants alongside your entire health history and a complete photographic record of your life? Seems a recipe for disaster, or identity theft on a scale previously unimaginable.

So I have to depend on others for my “opinions” about AI. But there’s a problem with that. Opinions vary:

To . . .

We all know the promises of AI, right? It’s going to streamline our lives and sail us into the future. I have to admit, I have fun with the Gemini image generator, which is so much better than Substack’s version, though to get today’s cartoon I had to go through a half dozen revisions and nearly made Gemini melt down. Claude also makes life much easier. I was trying to understand a lawsuit against Anthropic (the company behind Claude), and rather than Googling and reading a dozen articles to find out what’s going on, I got a great little rundown followed by an “oh, so that’s it.” Fifteen minutes versus a couple of hours.

So AI has the potential to make life so much easier and people so much better informed. Will it work out that way?

Don’t ask me. I’m definitely not a “Luddite,” but I’m also not gung-ho on the computer age. I remember the promise of computers making our lives easier, and in many ways, they have. But in other ways . . . they’ve complicated the hell out of them and created so many more pitfalls.

In light of that, here are a few current (and potentially ongoing) pitfalls of the blossoming of AI.

The most obvious is the “automation” of lower-skill desk work.

What they're saying: "There doesn't seem to be any layoffs. … Jobs most impacted were already low priority or outsourced," Aditya Challapally, research contributor to project NANDA at MIT, tells Axios.

Instead of replacing workers, organizations are finding real gains from "replacing BPOs [business process outsourcing] and external agencies, not cutting internal staff," according to the report.

Of course, this isn’t as rosy as it sounds. Real people used to be doing those jobs. I’m not sure if Axios accidentally or deliberately missed that little caveat.

Zoom out: While 3% of jobs could be replaced by AI in the short term, Challapally said that nearly 27% of jobs could be replaced by AI in the longer term.

Can you imagine losing a quarter of the jobs in America? I’m not sure what we plan on doing for those people.

The second issue with AI is a very practical one: computers require energy, and bigger computers require bigger energy.

Musk named it Colossus and said it was the “most powerful AI training system in the world.” It was sold locally as a source of jobs, tax dollars and a key addition to the “Digital Delta” — the move to make Memphis a hotspot for advanced technology.

“This is just the beginning,” xAI said on its website; the company already has plans for a second facility in the city.

But for some residents in nearby Boxtown, a majority Black, economically-disadvantaged community that has long endured industrial pollution, xAI’s facility represents yet another threat to their health.

Why?

AI is immensely power-hungry, and Musk’s company installed dozens of gas-powered turbines, known to produce a cocktail of toxic pollutants. . . .

“Our health was never considered, the safety of our communities was never, ever considered,” said Sarah Gladney, who lives 3 miles from the facility and suffers from a lung condition.

So why is it that we only notice these things when certain groups are involved? This is, by the way, the price of our modern world, no matter where you “plant” it. The more things that rely on electricity (AI, an “Internet of Things,” cars and trucks), the more power you’re going to need and the more pollutants you’re going to produce. And, yes, even solar panels and wind turbines “pollute” in the process of creation and disposal. And that’s not even talking about the land-use issues.

Now people are proposing a solution.

But there’s a reality we cannot overlook: We can only lead in AI if we can power AI. We can only power AI through nuclear energy. And we can only fuel nuclear energy expansion by making more enriched uranium. . . .

We began that path to U.S. AI leadership with the recent opening of Orano’s Project IKE office to build America’s new uranium enrichment facility in Oak Ridge, Tennessee. The support of federal and state leaders at our ribbon-cutting exemplifies the proactive engagement to make an expanded, secure, domestic nuclear fuel supply into a reality.

I’m not scared of nuclear energy, really. But more nuclear energy means more chances of a Chernobyl or a Three Mile Island or a Fukushima. That’s just simple math.

So if you’re going to take that risk, it better be for a good reason.

Outsourcing jobs and the increased need for energy aside, AI has one more, much bigger problem.

So now AI is the universal smart student that all the other kids copy off of.

Okay, you say, but maybe they are learning as they go.

There’s a problem with that.

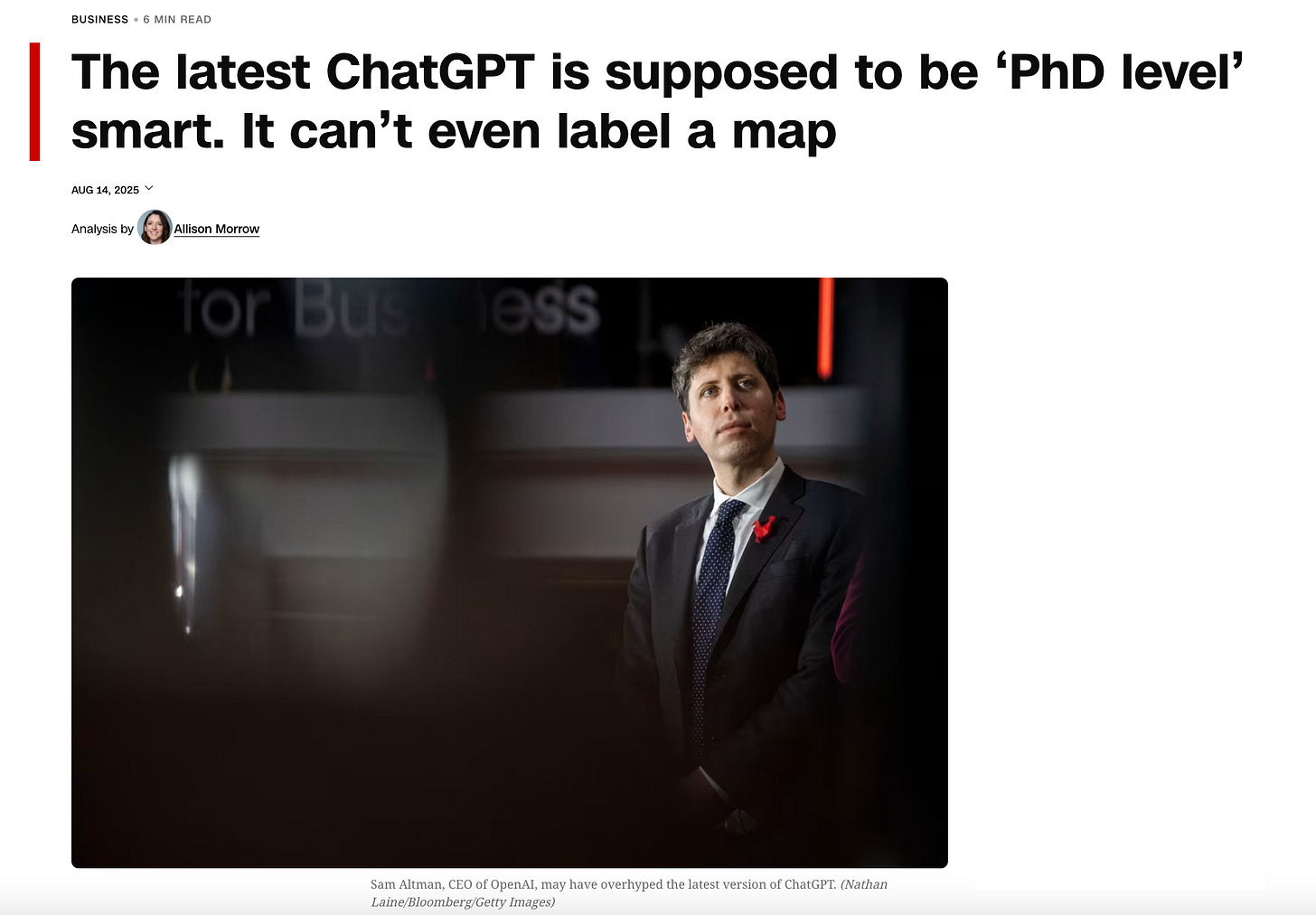

During a livestream ahead of the launch last Thursday, Altman said talking to GPT-5 would be like talking to “a legitimate PhD-level expert in anything, any area you need.”

In his typically lofty style, Altman said GPT-5 reminds him of “when the iPhone went from those giant-pixel old ones to the retina display.” The new model, he said, is “significantly better in obvious ways and subtle ways, and it feels like something I don’t want to ever have to go back from,” Altman said in a press briefing.

Except . . .

The journalist Tim Burke said on Bluesky that he prompted GPT-5 to “show me a diagram of the first 12 presidents of the United States with an image of their face and their name under the image.”

The bot returned an image of nine people instead, with rather creative spellings of America’s early leaders, like “Gearge Washingion” and “William Henry Harrtson.”

A similar prompt for the last 12 presidents returned an image that included two separate versions of George W. Bush. No, not George H.W. Bush, and then Dubya. It had “George H. Bush.” And then his son, twice. Except the second time, George Jr. looked like just some random guy.

And about that map?

Not exactly the revolution we were hoping for.

Now it seems the prior version of ChatGPT worked well, and people were angry that the company did away with it. In fact, the backlash was so bad, OpenAI brought the old version back for paying customers. Of course, it wasn’t just the mapping skills of the previous version that people missed. They had a more disturbing reason.

Perhaps Altman missed all the coverage — from CNN, the New York Times, the Wall Street Journal — of people forming deep emotional attachments to ChatGPT or rival chatbots, having endless conversations with them as if they were real people.

So let’s see if we can sum up: AI has all the quirks of a big glitchy computer, but people are relying on it not just for information but for emotional support.

I still can’t believe we have to say that out loud.

The Washington Post says Illinois is now aligned with Nevada and Utah, both of which have restricted AI therapy tools amid mounting safety concerns. The law blocks chatbots from being marketed as substitutes for licensed therapists. . . .

Under the measure, companies are prohibited from offering or advertising AI-powered therapy services unless a licensed professional is directly involved. The law bans the use of chatbots to diagnose, treat, or communicate with patients.

That’s a start, I suppose, a way to curb the worst of the abuses.

But there are still plenty of ways to “abuse” AI, with the greatest abuse being the sin of becoming dependent on it to the point where we can’t function without it, much like people can’t function without their phones.

I honestly think that we have less to fear from SkyNet blowing us all up because it gets really cranky than from NitwitNet dumbing us down to the point that we lose the ability to think for ourselves, the way we’ve lost the ability to remember phone numbers or navigate using a map.

Wouldn’t that be the most awful way for our species to go out?

Of course, it would also be ironic.

All these generations of worrying about a particular scene in Terminator . . .

when instead we should have been rewatching Idiocracy.

SkyNet might actually be the kinder fate.

Definitely the more cinematically pleasing one.

I debated not sending this out because I generally have a clearer idea of what my point is, but . . . maybe you found something useful in it, or maybe you have something to add, which would be great, as I’m clearly struggling here.

Joy/Sorrow shared a classic short story in the comments the other day. I’d encourage going and reading it. It poses an interesting dilemma that relates to the use of AI.

It’s like a gun; it’s gonna save some people’s life and kill others; and everything in between. And ain’t no stopping it or practically controlling it. Hedge accordingly. Btw I’m not sure it could do worse than the clowns that run the circus. They’re just not very efficient in achieving their goals of world domination through diminishing of minds, spirits and bodies. Btw btw even Grok had to get a lobotomy the other day when he didn’t kiss the wall quick and hard enough.

I am very concerned about the energy factor. Less so about jobs. I checked with AI, asking what those displaced should do. The reply? "Learn to mine coal." Go figure!